Language Online: Social Media Censoring, Teaching AI and Hate Speech

Sometimes when we go on social media it can be a bit like coming face to face with all the hatred in the world at once. Be it fandom wars and celebrity arguments, or global politics and blanket discrimination, there is nowhere to escape the vitriol of self-appointed experts who comment with nothing but abuse because they think their voices should be heard.

Along with teaching each other this hateful language, our views are seeping into the supposedly neutral thoughts of AI. Here is what is happening and what ways are being proposed to help.

Recognition

In an ideal world, which is not the world we live in, people would go online and just… not be hateful. We wouldn’t have racism, misogyny, or any form of bigotry or discrimination at all. It is a hope and a dream that seems unattainable, unless we all become better people overnight.

So, as humans, what do we do, at least in 2018? We turn to our machines. Teaching AI to tell the difference between what is hate speech and what isn’t has been a subject of discussion for years. What is needed is a way to get AI to recognise and filter out this abusive language so that when we use social media and so on, what we see is what we want to see, not what other people are determined to make us see. Controversial? It wouldn’t be a hot topic if it weren’t!

A case study

Or rather, an example of things going terribly wrong: in March 2016, Microsoft launched an AI chatbot called Tay on Twitter that could interact with and reply to followers through memes and canned answers. Less than a day after her launch, Tay was taken down. She had been targeted by a number of trolls with discriminatory views, in particular antisemitic ones. Tay’s interactions with those followers escalated from a single suggestion linking Jewish people to 9/11, which prompted Tay’s algorithms to seek out information to support her replies, to an outpouring of abuse and intolerance.

Navigating language

An algorithm that can identify hate speech and remove it from our various feeds would be the ideal solution, but in a world where freedom of speech has become synonymous with justification to abuse and bully, how does AI distinguish comments made in jest from real points of view? When there are those among us who enjoy using offensive language as banter, or use derogatory terms for our loved ones for fun, how can a machine, or a piece of software, tell the difference?

Photo via Flickr

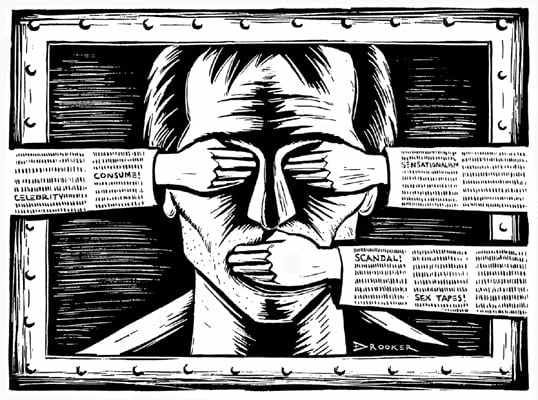

Censorship

One method of attempting to abolish all hateful language has been for companies to completely censor sensitive words. And while this was in all likelihood done with good intentions, the actual results have done a lot of damage. Take YouTube, for example, whose algorithm changes marked any LGBT content as explicit when viewed in restricted mode, or Tumblr, which did the same for its safe mode users. These mistakes were rectified after complaints, but the damage was already done, and many thought the message was loud and clear: one of intolerance and prejudice.
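To see why blanket word censoring backfires, here is a minimal sketch of a context-blind filter. It is purely illustrative: the blocklist and the function name are made up for this example, and nothing here is meant to describe how YouTube's or Tumblr's systems actually worked.

```python
import re

# Hypothetical blocklist, chosen only for illustration.
# It is not the list used by any real platform.
BLOCKLIST = {"gay", "lesbian", "trans"}


def is_restricted(text: str) -> bool:
    """Flag text if any word in it is on the blocklist, ignoring context."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


supportive = "Advice for gay teens on coming out to their parents"
unrelated = "How to bake sourdough bread"

print(is_restricted(supportive))  # the supportive video is hidden
print(is_restricted(unrelated))   # the unrelated video is not
```

A filter like this cannot tell a supportive video from an abusive one, because it only ever looks at the word and never at how the word is being used.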

Other solutions

Perhaps there isn’t one perfect solution, but there is an ongoing series of attempts to make things better. A team from McGill University in Montreal taught machine learning software to identify hate speech by comparing the language used by hateful and non-hateful communities online. This approach focused on how certain words were used rather than on the words themselves, aiming to avoid the censorship issues mentioned above.
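The idea of comparing the language of two communities can be sketched in a few lines. This is a toy illustration under our own assumptions, not the McGill team's method: the two tiny "communities", the function names and the scoring rule (a smoothed log-odds ratio per word) are all invented for the example.

```python
import math
from collections import Counter


def word_counts(posts):
    """Count how often each lowercase word appears across a list of posts."""
    return Counter(word for post in posts for word in post.lower().split())


def log_odds(hostile_posts, neutral_posts):
    """Score each word: positive means more typical of the hostile community."""
    hostile = word_counts(hostile_posts)
    neutral = word_counts(neutral_posts)
    vocab = set(hostile) | set(neutral)
    # Add-one smoothing so words unseen in one community do not divide by zero.
    total_h = sum(hostile.values()) + len(vocab)
    total_n = sum(neutral.values()) + len(vocab)
    return {
        w: math.log((hostile[w] + 1) / total_h) - math.log((neutral[w] + 1) / total_n)
        for w in vocab
    }


def score_comment(comment, weights):
    """Average the weights of the known words; unknown words are skipped."""
    known = [weights[w] for w in comment.lower().split() if w in weights]
    return sum(known) / len(known) if known else 0.0


# Made-up example posts standing in for two online communities.
hostile_posts = ["those people are vermin", "send the vermin away"]
neutral_posts = ["those people are friendly", "the neighbours are friendly"]

weights = log_odds(hostile_posts, neutral_posts)
print(score_comment("vermin everywhere", weights))
print(score_comment("friendly neighbours", weights))
```

Nothing is banned outright here. A word only counts against a comment if it turns up far more often in the hostile community than in the neutral one, which is what lets an approach like this sidestep the blunt censorship problem.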

A similar approach was adopted by a group from the University of Rochester, who designed algorithms to detect hate codes: terms that slip past filters by substituting a seemingly neutral word for an inflammatory or derogatory one.

Jigsaw, a Google company, has developed Perspective, an algorithm that scores comments on their toxicity. GLAAD is currently working with Jigsaw to help distinguish slurs against the LGBT community from phrases that simply describe or represent that community, as part of tackling the hate language problem.

Twitter is attempting to do its part by filtering out certain abusive language, although many users say it is censoring the wrong types of language, focusing on profanities and yet still allowing hateful language against minority groups. And finally, Mark Zuckerberg has announced that AI for Facebook will be able to successfully identify internet hate speech within the next five to ten years.

In short, efforts are being made, and though there are those that will argue they are nowhere near enough, we have to start the fight against abusive online language somewhere. Or maybe, we could all just become better people, and stop being so unpleasant with the way we speak! One day, perhaps, one day.